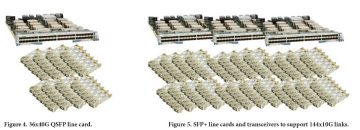

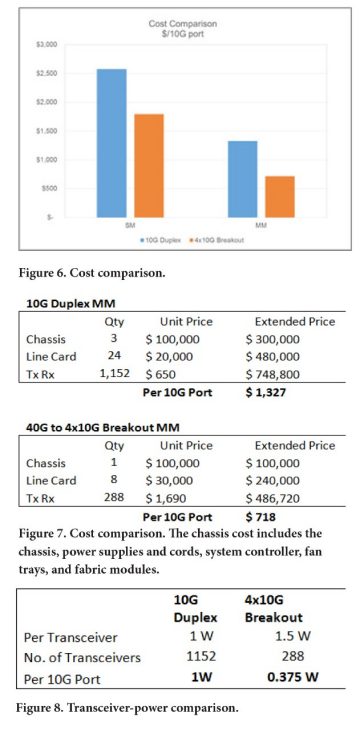

Port-breakout deployments have become a popular networking tool and are driving much of the industry demand for parallel optics transceivers. Today, port breakout is commonly used to operate 40/100Gbps (40/100G) parallel optics transceivers as four 10/25Gbps (10/25G) links. Breaking out parallel ports benefits multiple applications, such as building large-scale spine-and-leaf networks and enabling today's high-density 10/25G networks. The latter application is the focus of this article.
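As a rough illustration of what "operating a 40/100G port as four 10/25G links" means, the sketch below splits one parallel port into four equal-speed logical links. It is a minimal model only: the port names, the `Link` type, and the `break_out` helper are hypothetical and do not correspond to any switch vendor's configuration syntax. It assumes four lanes per parallel port, matching the 40G to 4x10G and 100G to 4x25G cases described above.

```python
from dataclasses import dataclass

# Assumption: each parallel-optics port carries four lanes,
# as in the 40G -> 4x10G and 100G -> 4x25G breakouts.
LANES_PER_PORT = 4


@dataclass(frozen=True)
class Link:
    """One logical link created by breaking out a parallel port (hypothetical model)."""
    parent_port: str  # name of the parallel port being broken out
    lane: int         # 1-based lane index within that port
    speed_gbps: int   # data rate of this individual link


def break_out(port_name: str, port_speed_gbps: int,
              lanes: int = LANES_PER_PORT) -> list[Link]:
    """Split one parallel port into `lanes` independent links of equal speed."""
    if lanes <= 0 or port_speed_gbps % lanes != 0:
        raise ValueError("port speed must divide evenly across a positive lane count")
    lane_speed = port_speed_gbps // lanes
    return [Link(port_name, i, lane_speed) for i in range(1, lanes + 1)]


if __name__ == "__main__":
    for link in break_out("port1", 40):
        print(link)
```

Calling `break_out("port1", 100)` would similarly yield four 25G links, one per lane of the parallel transceiver.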

The Cisco Visual Networking Index predicts that Internet Protocol (IP) traffic will grow at a compound annual growth rate (CAGR) of 22 percent from 2015 to 2020, driven by explosive growth in wireless and mobile devices. This traffic translates into growth in both enterprise and cloud data centers, which explains why data centers are often the earliest adopters of the fastest network speeds and are consistently searching for solutions that conserve rack and floor space. Just a few years ago, a density revolution in the structured-cabling world doubled the density of passive data center optical hardware to 288 fiber ports of either LC or MTP® connectors in a 4U housing. This trend has now carried over to the switching side, where deploying a port breakout configuration can up to triple the port capacity of a switch card operating in a 10G or 25G network.
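The arithmetic behind these figures can be sketched as follows. The line-card sizes here are hypothetical, chosen only to show how a "triple" gain can arise: a card with 36 QSFP (40/100G) ports, each broken out into four links, compared with a same-size card offering 48 native SFP (10/25G) ports. Actual cards vary, so the ratio depends entirely on these assumed port counts. The same sketch shows what a 22 percent CAGR compounds to over the five years from 2015 to 2020.

```python
# Hypothetical line-card sizes, for illustration only.
QSFP_PORTS_PER_CARD = 36  # parallel-optics ports on a breakout-capable card
SFP_PORTS_PER_CARD = 48   # native 10/25G ports on a comparable card
LANES_PER_QSFP = 4        # each parallel port breaks out into four links


def breakout_capacity(qsfp_ports: int, lanes: int = LANES_PER_QSFP) -> int:
    """Number of 10/25G links a card provides when every QSFP port is broken out."""
    return qsfp_ports * lanes


def density_gain(qsfp_ports: int, sfp_ports: int,
                 lanes: int = LANES_PER_QSFP) -> float:
    """Ratio of broken-out link count to the native SFP port count."""
    return breakout_capacity(qsfp_ports, lanes) / sfp_ports


def cagr_growth_factor(rate: float, years: int) -> float:
    """Total growth multiple after compounding `rate` annually for `years`."""
    return (1 + rate) ** years


if __name__ == "__main__":
    print(breakout_capacity(QSFP_PORTS_PER_CARD))                   # 144
    print(density_gain(QSFP_PORTS_PER_CARD, SFP_PORTS_PER_CARD))    # 3.0
    print(round(cagr_growth_factor(0.22, 2020 - 2015), 2))          # 2.7
```

Under these assumptions, 36 ports x 4 lanes = 144 links versus 48 native ports, a 3x gain; and 22 percent annual growth sustained for five years roughly multiplies traffic by 2.7.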